The robots.txt file: a source of pure "SEO juice" just waiting to be unlocked.

Better Robots.txt features:

- Lets you allow (or disallow) 15 of the most popular search engines to crawl your website;

- Detects the sitemap index (sitemap-index.xml) generated by the Yoast SEO plugin, or any other sitemap, and adds it automatically to your robots.txt file;

- Lets you add your own sitemaps;

- Lets you edit your robots.txt file to add custom rules;

- Lets you set a global crawl delay for search engine robots;

- Blocks malicious bots (100) from crawling your website;

- Blocks backlink scans (and hides your backlinks from competitors);

- Prevents crawling of useless links generated by WooCommerce;

- Avoids infinite crawl loops generated by certain web features (calendars, search logs, …);

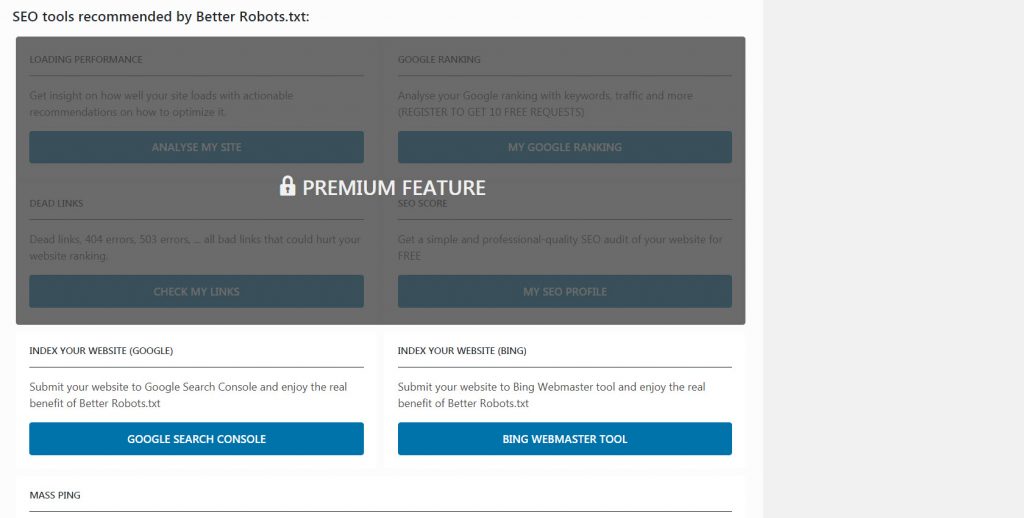

- Provides more than 150 growth hacking tools;

- And more…
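
To make a few of these features concrete, here is a minimal sketch of what a robots.txt file with a blocked bot, a global crawl delay, and a sitemap entry could look like, checked with Python's standard `urllib.robotparser`. The bot name `BadBot`, the 10-second delay, the `/private/` path, and the `example.com` URLs are illustrative assumptions, not the plugin's actual output.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: bot name, delay, path, and URLs are
# assumptions made up for this example, not what the plugin generates.
ROBOTS_TXT = """\
User-agent: BadBot
Disallow: /

User-agent: *
Crawl-delay: 10
Disallow: /private/

Sitemap: https://example.com/sitemap-index.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# The blocked bot may not fetch anything; other crawlers may fetch public pages.
blocked = parser.can_fetch("BadBot", "https://example.com/")
allowed = parser.can_fetch("Googlebot", "https://example.com/blog/")

# The global crawl delay and the declared sitemaps (site_maps needs Python 3.8+).
delay = parser.crawl_delay("Googlebot")
sitemaps = parser.site_maps()
```

Keep in mind that robots.txt rules are only honored by crawlers that choose to respect them, and that not every search engine supports the `Crawl-delay` directive.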

The Better Robots.txt plugin also adds standard rules to your robots.txt file to optimize search engines' "crawl budgets" by telling them not to crawl the parts of your site that are not shown to the public by default.
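
As a rough illustration of such standard rules, the sketch below builds a small rule set that asks every crawler to skip a couple of non-public WordPress paths, then verifies the result with `urllib.robotparser`. The helper `build_rules` and the paths `/wp-admin/` and `/wp-login.php` are hypothetical choices for this example; the rules the plugin really writes may differ.

```python
from urllib.robotparser import RobotFileParser


def build_rules(private_paths):
    """Return robots.txt text asking every crawler to skip the given paths."""
    lines = ["User-agent: *"]
    lines += [f"Disallow: {path}" for path in private_paths]
    return "\n".join(lines) + "\n"


# Illustrative non-public WordPress paths (assumed, not taken from the plugin).
robots_txt = build_rules(["/wp-admin/", "/wp-login.php"])

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# Admin pages are excluded from crawling; ordinary public pages are not.
admin_ok = parser.can_fetch("Googlebot", "https://example.com/wp-admin/options.php")
post_ok = parser.can_fetch("Googlebot", "https://example.com/hello-world/")
```

Keeping crawlers away from such paths leaves more of their crawl budget for the pages you actually want to appear in search results.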

To get familiar with the syntax used in the robots.txt file, see: Basic robots.txt guidelines by Google.

How do you optimize Better Robots.txt and your robots.txt file?