Video Embedder with transcription (SEO)

The latest generation of video player for SEO performance

The Vidseo plugin was designed to revolutionize the way video is used on the Web in general.

The main problem with videos is that the content narrated in them cannot be indexed by search engines (even with a video sitemap).

In other words,

- When you publish a video on YouTube or Vimeo, the most important elements for generating organic visibility (and thus appearing in the SERPs) are the title and the description you give the video.

- When you embed a video from YouTube or Vimeo on your website, the only positive SEO factor it generates is longer session time on your pages, which can improve your ranking for a given keyword.

Video is very popular on the Web and converts well. However, as it stands, its contribution to organic SEO, and therefore to performance in the SERPs, is among the weakest.

Now imagine that all the content spoken in the video became usable and indexable, in text form, when you embed the video in your web page. Imagine a 5-10 minute video presenting your services and products, where everything you say in the video could be integrated as text on your page, in addition to the text the page already contains, elegantly and discreetly (UX).

This is exactly what Vidseo allows you to do.

Now imagine the COLOSSAL amount of text that could be used from all videos published on Youtube and Vimeo, considering that EVERY minute, over 500 hours of content is put online? We are talking here about ORIGINAL content, never indexed beforehand by search engines.

Let’s go even further. Now imagine, using this type of feature, a website consisting only of videos? No more texts, no more images, … only videos, integrating video transcripts to generate organic SEO.

How do you think search engines will consider your page? It’s a safe bet that they will see a top quality reference given the amount of original content related to your service offer.

How to skyrocket your SEO Website with video transcripts?

The Vidseo plugin offers a completely new way of generating top-quality content for your SEO. It lets you attach the transcripts automatically generated by YouTube to your videos (or to any video you find). It also lets you edit, correct, optimize and format them (with markup and layout) so that they are presented elegantly and remain fully indexable, without harming the user experience (UX).

The advantage of Vidseo is that it allows you to control how these video transcripts are presented to your visitors. You can:

- Show an excerpt, of a length you define, below the video; a single click then displays the entire transcript

- Show the entire transcript directly below the video

- Hide the transcript from your visitors while keeping it in your web page’s HTML
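As a rough illustration of the first option, the sketch below shortens a transcript to a given number of characters. It is not the plugin's actual code: the function name `make_excerpt`, the word-boundary handling and the trailing "..." are assumptions made for this example.

```python
def make_excerpt(transcript: str, length: int = 60) -> str:
    """Return roughly the first `length` characters of a transcript.

    Hypothetical helper for illustration only; Vidseo's own excerpt
    logic may differ.
    """
    # Collapse line breaks and repeated spaces into single spaces.
    text = " ".join(transcript.split())
    if len(text) <= length:
        return text
    cut = text[:length]
    # If the cut falls inside a word, back up to the previous space.
    if text[length] != " " and " " in cut:
        cut = cut.rsplit(" ", 1)[0]
    return cut.rstrip() + "..."
```

The full transcript would still be present in the page; only what the visitor sees before clicking is shortened.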

The other advantage is that you can edit the transcription automatically generated by YouTube, which is not necessarily reliable. You can rewrite the text, format it into paragraphs, and add heading tags that will help your SEO, as well as links and anything else you would use in a standard page layout.

The Vidseo plugin lets you do what no other plugin on WordPress, or even YouTube(!), is able to do: allow the effective indexing of the content developed in your videos. Millions of hours of content are available to generate SEO like never before!

How to use Vidseo plugin? Skyrocket your SEO with Video transcription

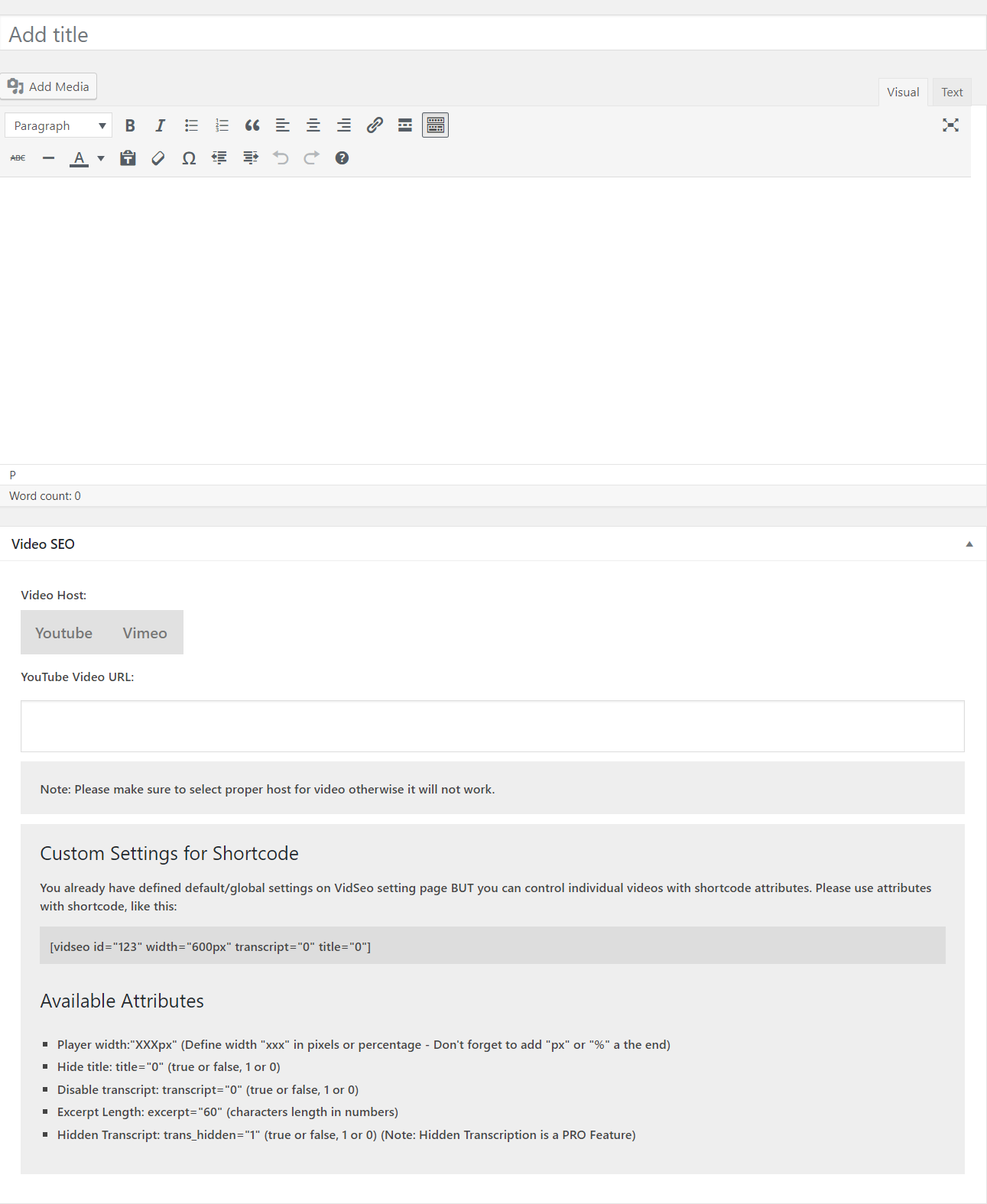

Once the plugin is installed, go to the Vidseo post type section and create your first shortcode:

- Copy-paste your video URL from YouTube/Vimeo

- Define where this video is from (YouTube/Vimeo)

- Insert your content or transcription

- Customize your content (HTML editing is available only with the PRO version)

- Provide a title

- Copy-paste the shortcode into your pages/posts/products

- Customize your shortcode with attributes (so that you can display your video with settings other than those defined on the VidSEO settings page)

Available Attributes

- Player width: width="XXXpx" (define the width "XXX" in pixels or as a percentage; don’t forget to add "px" or "%" at the end)

- Hide title area: title="0" (true or false, 1 or 0)

- Disable transcript: transcript="0" (true or false, 1 or 0 | if you want to use VidSEO as a regular player)

- Excerpt length: excerpt="60" (length in characters, as a number)

- Hidden transcript: trans_hidden="1" (if you want to hide the transcription from users but keep it in the page source for search engines)

Example: [vid-seo id="123" width="600px" transcript="0" title="0"]
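If you generate many shortcodes (for example from a spreadsheet of videos), a small script can assemble them. The following is a minimal sketch, not part of the plugin: the function name `build_vidseo_shortcode` is hypothetical, and it only uses the attribute names listed above (`id`, `width`, `transcript`, `title`, `trans_hidden`, `excerpt`).

```python
import re


def build_vidseo_shortcode(video_id, width=None, transcript=None,
                           title=None, trans_hidden=None, excerpt=None):
    """Build a [vid-seo ...] shortcode string (hypothetical helper)."""
    attrs = [("id", str(video_id))]
    if width is not None:
        # The width must carry its unit, e.g. "600px" or "80%".
        if not re.fullmatch(r"\d+(px|%)", width):
            raise ValueError("width must end in 'px' or '%', e.g. '600px'")
        attrs.append(("width", width))
    # On/off attributes are written as "1" or "0".
    for name, flag in (("transcript", transcript),
                       ("title", title),
                       ("trans_hidden", trans_hidden)):
        if flag is not None:
            attrs.append((name, "1" if flag else "0"))
    if excerpt is not None:
        # Excerpt length is a number of characters.
        attrs.append(("excerpt", str(int(excerpt))))
    body = " ".join(f'{key}="{value}"' for key, value in attrs)
    return f"[vid-seo {body}]"
```

Calling `build_vidseo_shortcode(123, width="600px", transcript=False, title=False)` produces the same string as the example above. Note that the output uses straight quotes, which is what you should paste into the WordPress editor.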

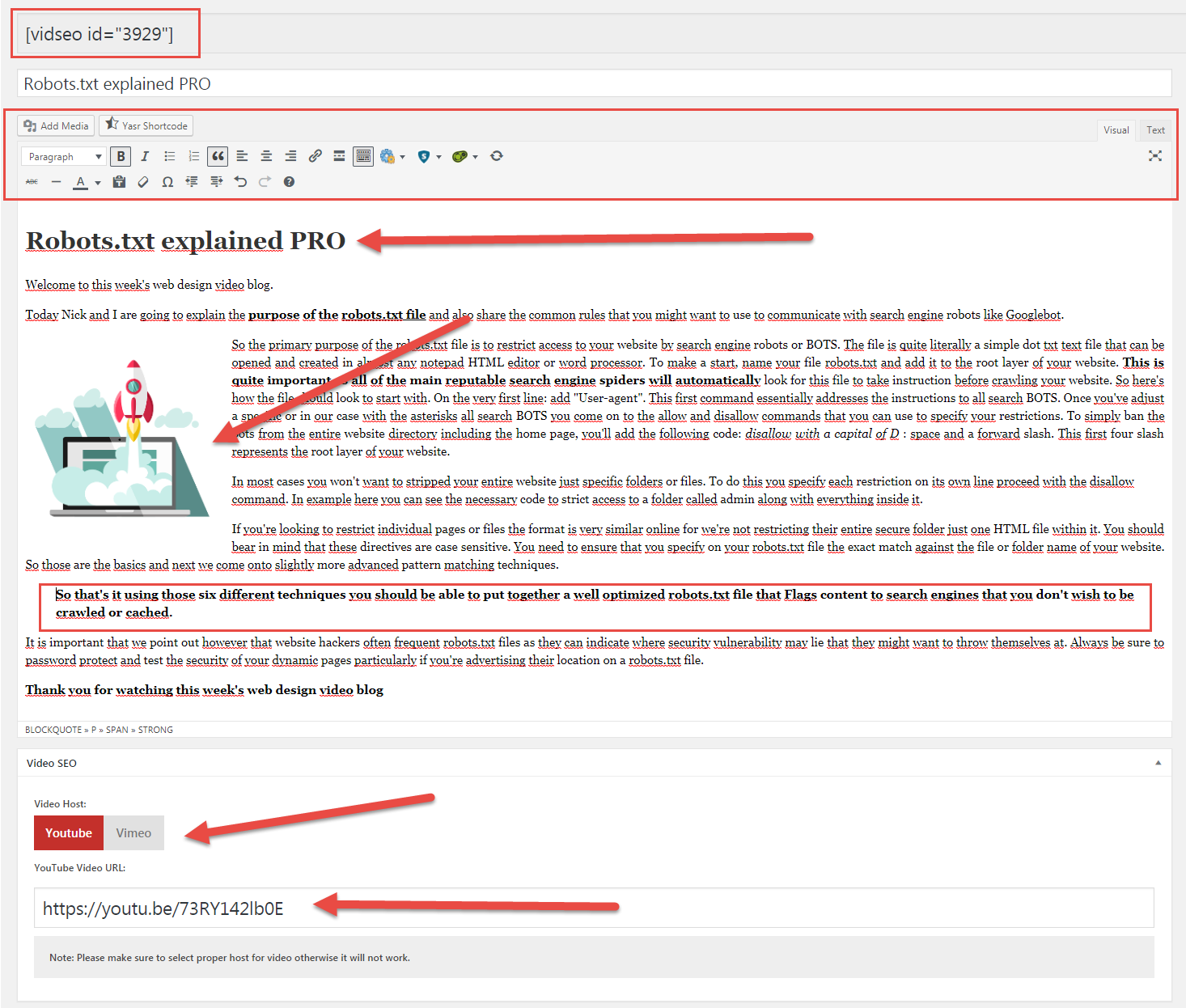

VidSEO PRO with HTML editor

1. Make your first shortcode (FREE)

Provide the transcript, add the video URL and select the video host (FREE version)

The VidSEO player is very easy to use. Once your content is added in the textarea (no formatting allowed), simply add your video URL (from your own channel or any other video found on YouTube/Vimeo), make sure to select the proper host for the video, and save.

The VidSEO plugin will provide a shortcode to copy-paste into your page, post, product, … Please note that custom attributes (for the shortcode) are available with the FREE version.

2. Make your first shortcode (PRO)

Provide the transcript, format it with headings, links and images, add the video URL and select the video host (PRO version)

The VidSEO PRO plugin provides much more flexibility. You have access to the Classic editor to format your content with headings, links, images and more. You can also choose to hide the transcript from users (on the frontend) while the content remains available in the page source for search engines.

If needed, you can also modify the title area and the transcription box background.

Please note that custom attributes are also available with the PRO version.

Variations - Vidseo plugin

What can be done with the VidSEO plugin?

Now that you understand how to benefit from Vidseo for your own content, by using video transcription to generate organic traffic to your site, imagine the amount of information available on Youtube, and especially, all the content yet to come!

Millions of hours of content that you can use, directly (via the excerpt) or indirectly (by hiding the transcript) to boost your SEO and of course, the visibility of your services and products.

On the Web, the two most important elements these days are:

- Content, because that’s what allows you to generate SEO and organic traffic to your site (Content Marketing)

- Video, because that’s what generates the most conversion (and interaction) on the web and social media in general and, when integrated into a website, tends to increase session time (positively impacting your Google ranking for a given keyword).

Content is so important that it is, in reality, what makes your video generate SEO (Google, YouTube, …), via the title and description you enter in YouTube / Vimeo when you create your video. Content is KING, as is well known.

That’s why the best SEO strategies are always accompanied by content production: content is the only thing that allows search engines to understand your site or your web pages, to generate SEO, and to offer those pages to Internet users when a query matches them.

However, it was never possible to combine text AND video for SEO purposes. Content and video have always been considered as two separate entities, requiring a different strategy.

It’s now a thing of the past.

How to get a YouTube video transcription

2 simple, very effective methods

METHOD 1: generate transcript of a Youtube Video

- Go to YouTube and open the video of your choice.

- Click on the More actions button (represented by 3 horizontal dots) located next to the Share button

- Now click on the Transcript option

- A transcript of the closed captions will automatically be generated

- Copy the content and paste it into the Vidseo FREE editor or the Vidseo PRO visual editor.
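Text copied from the transcript panel may include timestamps. The sketch below shows one way to strip them before pasting; it assumes timestamps look like `0:05` or `1:02:33` and sit on their own line or at the start of a line. The function name `clean_transcript` is hypothetical, and the script is not part of Vidseo.

```python
import re

# Matches a leading timestamp such as "0:05", "12:34" or "1:02:33".
TIMESTAMP = re.compile(r"^\s*(?:\d{1,2}:)?\d{1,2}:\d{2}\s*")


def clean_transcript(raw: str) -> str:
    """Remove leading timestamps and join the caption lines into one text."""
    parts = []
    for line in raw.splitlines():
        line = TIMESTAMP.sub("", line).strip()
        if line:  # skip lines that held only a timestamp
            parts.append(line)
    return " ".join(parts)
```

A caption line that genuinely begins with a time (for example "10:30 is when we start") would lose that prefix, so proofread the result before publishing.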

METHOD 2: DIY Captions – YouTube video captions & subtitles

This second method is quite simple and easy to use. DIY Captions is free third-party software that is not affiliated with YouTube.

- Step 1: Go to the DIY Captions website: https://www.diycaptions.com/

- Step 2: Enter the YouTube video URL in the given box, then click the Get Text button to get the full text of the audio.

- Step 3: Copy the content and paste it into the Vidseo FREE editor or the Vidseo PRO visual editor.